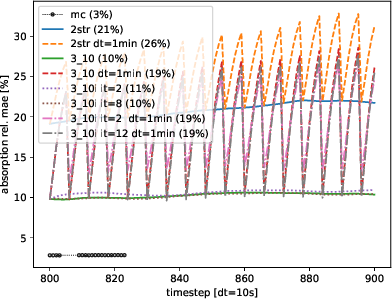

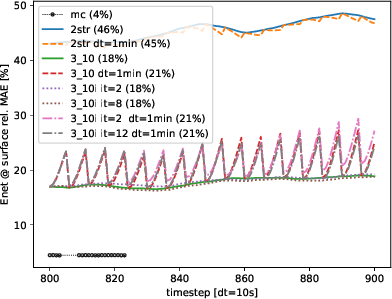

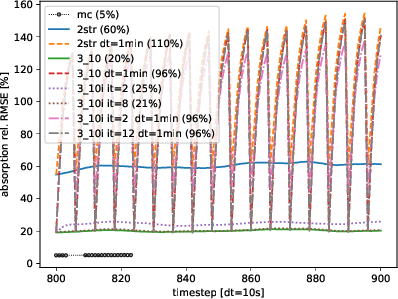

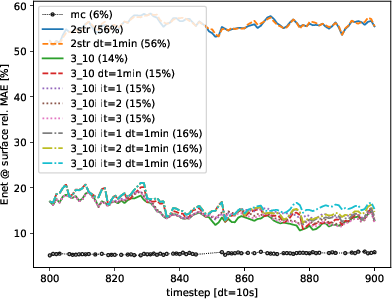

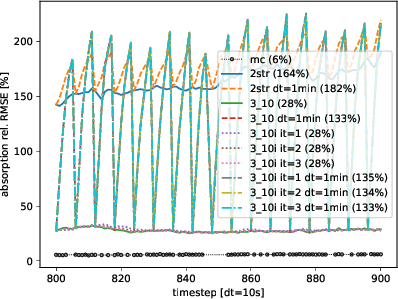

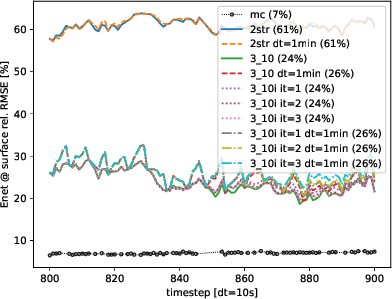

I ran an experiment prepared by Richard Maier: offline radiative transfer computations of a high-resolution cumulus cloud field at high temporal resolution. The experiment uses a 25 m horizontal resolution with output every 10 s. Over the course of the simulation, the solar zenith angle changes from 41° to 35° and the solar azimuth from 123° to 127°. The cloud fraction increases from 0.29 to 0.38. The question I wanted to answer is how many solver iterations are needed to obtain reasonable results for a given radiation call frequency.

The experiment consists of the following steps:

- Run Monte Carlo benchmark simulations at a 10 s interval

- Run incomplete solves with a varying number of iterations and compare them to the benchmark simulation (direct solar radiation is always solved fully, i.e. only the diffuse solver's iteration count changes)

- Run computations at a reduced temporal sampling frequency, i.e. call radiation only every n-th timestep and keep the radiation field constant in between
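The reduced-frequency step amounts to a sample-and-hold over the 10 s time series. The following is a minimal sketch, assuming one radiation field per timestep is available as a list; the function name `hold_every_nth` is hypothetical and not part of TenStream or any other library.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def hold_every_nth(fields: Sequence[T], n: int) -> List[T]:
    """Emulate calling radiation only every n-th timestep.

    fields[i] is the radiation field a call at timestep i would produce
    (hypothetical input layout). Timesteps between calls reuse the most
    recently computed field, i.e. the field is held constant.
    """
    if n < 1:
        raise ValueError("n must be >= 1")
    held: List[T] = []
    for i in range(len(fields)):
        last_call = (i // n) * n  # index of the most recent radiation call
        held.append(fields[last_call])
    return held


# With 10 s output and n = 3, radiation is called at 0 s, 30 s, 60 s, ...
print(hold_every_nth([10, 11, 12, 13, 14, 15, 16], 3))
# [10, 10, 10, 13, 13, 13, 16]
```

Comparing the held series against the benchmark at every 10 s step then captures the error introduced by the reduced calling frequency, separately from the error of the incomplete solve itself.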

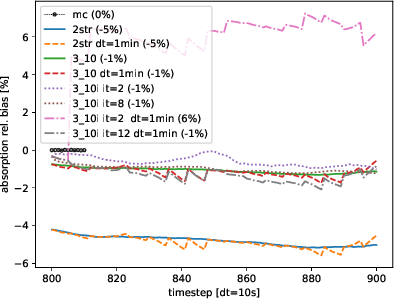

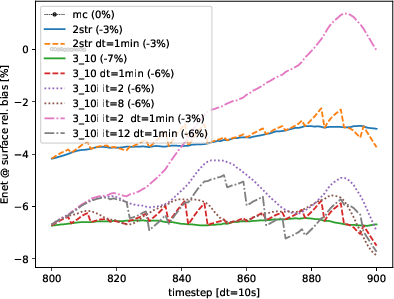

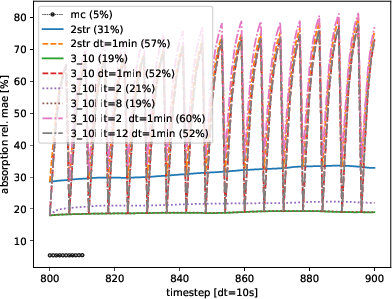

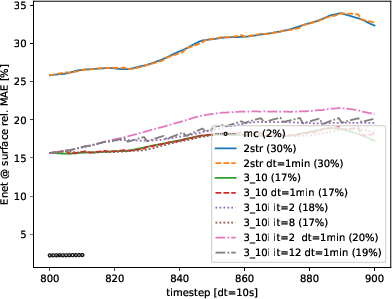

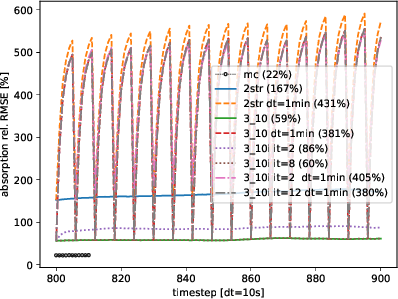

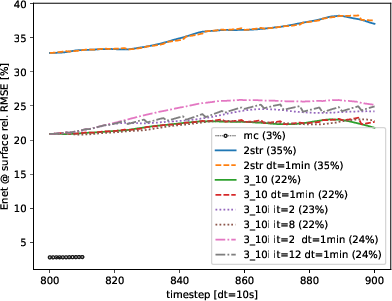

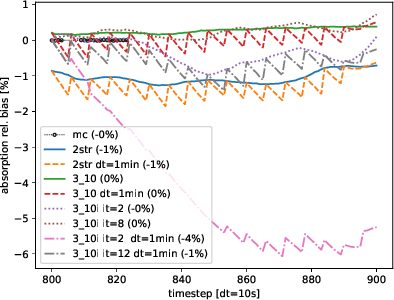

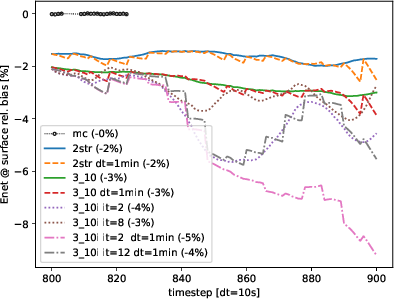

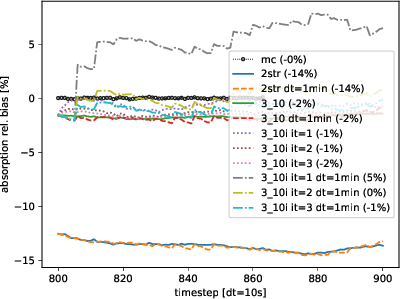

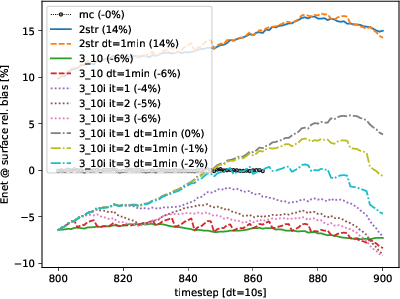

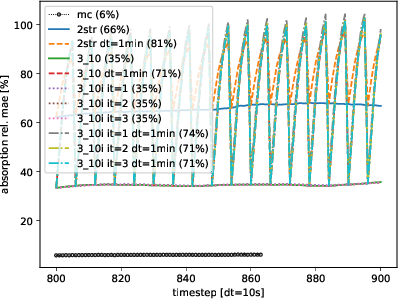

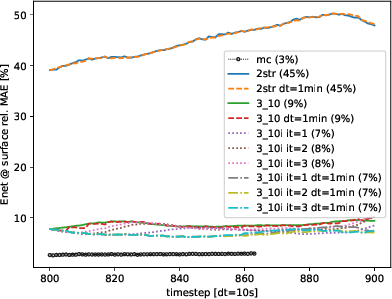

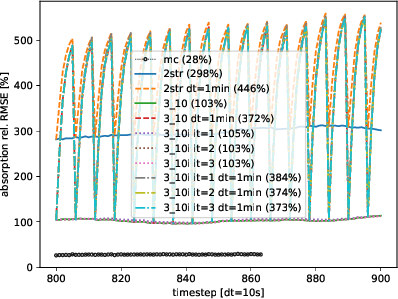

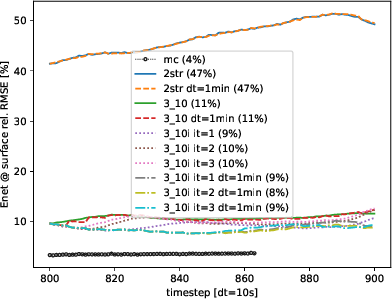

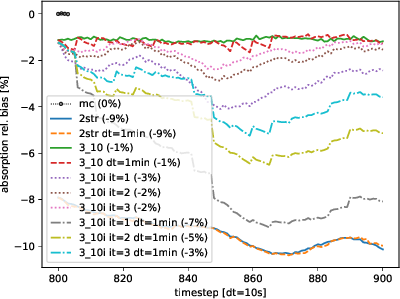

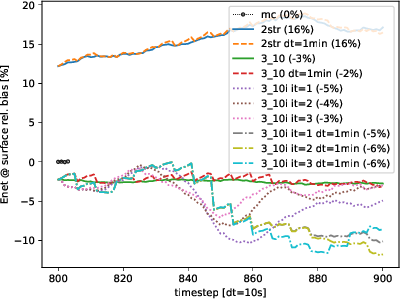

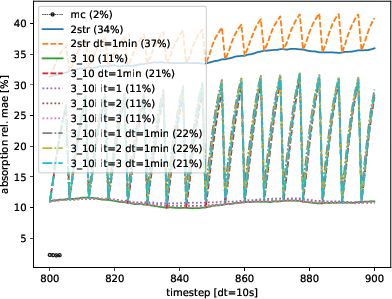

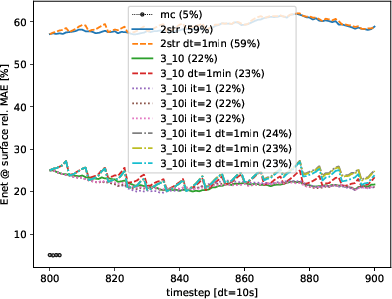

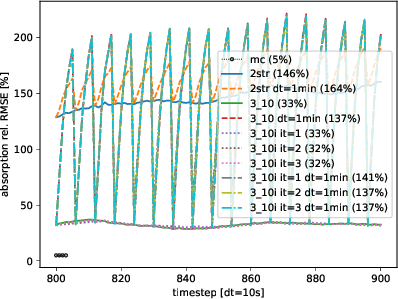

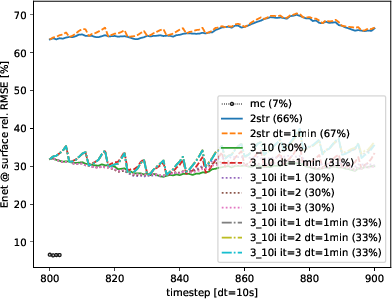

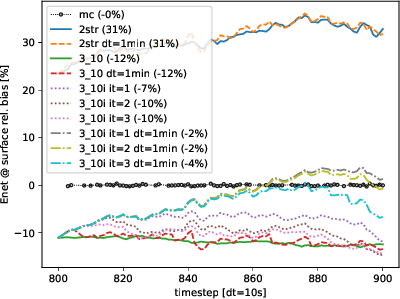

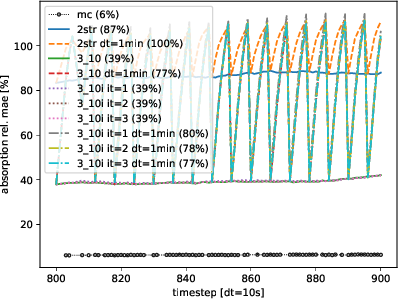

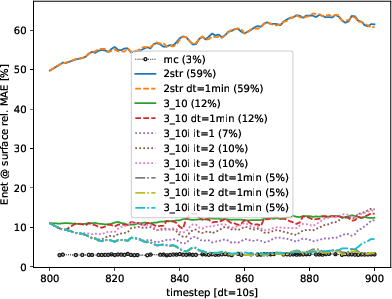

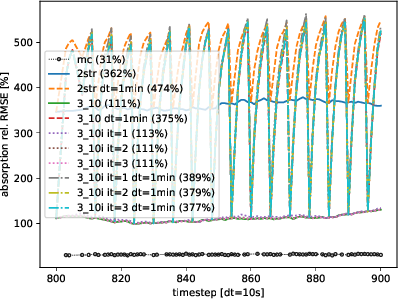

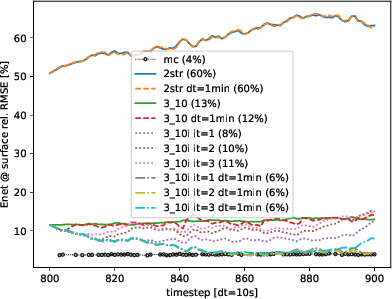

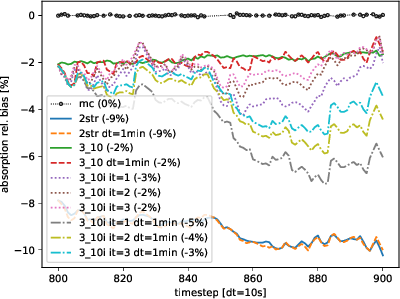

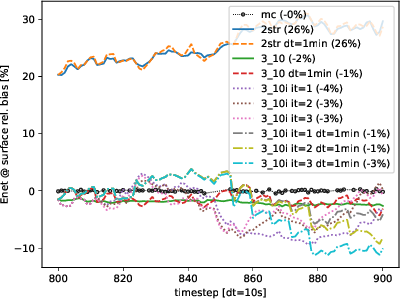

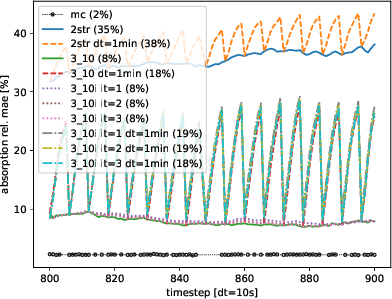

The following plots show error metrics for atmospheric absorption and net surface heating. The mean value of each error metric is given in the legend.
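The exact metric definitions are not stated here. As an assumption, a common choice is the bias (mean error) and root-mean-square error of each field against the Monte Carlo benchmark, computed per timestep and then averaged over time for the legend value. A minimal sketch in plain Python, with hypothetical function names:

```python
import math
from typing import Sequence, Tuple


def bias_and_rmse(test: Sequence[float], ref: Sequence[float]) -> Tuple[float, float]:
    """Bias (mean error) and RMSE of a field against a benchmark field.

    Both fields are flattened 1-D sequences of equal length, e.g. the net
    surface heating of every column at one timestep (assumed layout).
    """
    if len(test) != len(ref) or not test:
        raise ValueError("fields must be non-empty and of equal length")
    diffs = [t - r for t, r in zip(test, ref)]
    bias = sum(diffs) / len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return bias, rmse


def time_mean(values: Sequence[float]) -> float:
    """Average a per-timestep metric over the whole simulation."""
    return sum(values) / len(values)


# Two toy timesteps: one with an error in the last column, one exact.
per_step = [bias_and_rmse([1.0, 2.0, 4.0], [1.0, 2.0, 2.0]),
            bias_and_rmse([2.0, 2.0, 2.0], [2.0, 2.0, 2.0])]
print(time_mean([b for b, _ in per_step]), time_mean([r for _, r in per_step]))
```

Note that the bias can hide compensating errors (positive and negative deviations cancel), which is why it is usually reported together with the RMSE.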

Discussion: not yet written; in progress under Richard's guidance.

Longwave results

Shortwave results

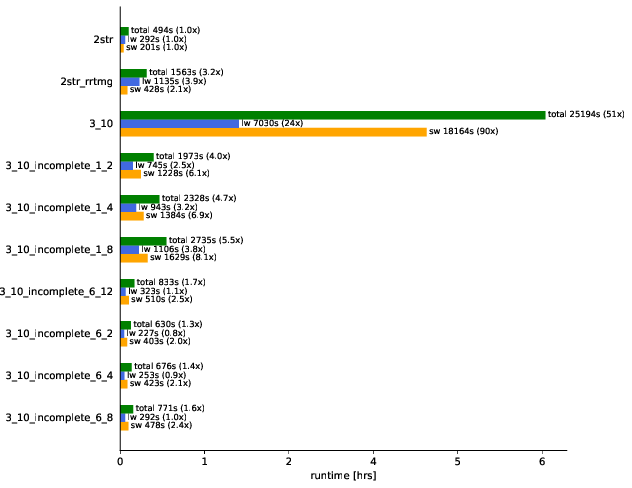

Performance analysis

CPU timings for:

- 2str with repwvl

- 2str with RRTMG

- full solves with TenStream

- TenStream incomplete solves, where the first number is the radiation calling frequency and the second the number of SOR iterations per radiation call
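An incomplete solve caps the number of SOR sweeps per radiation call instead of iterating to convergence. The sketch below applies a fixed number of SOR sweeps to a small dense linear system `A x = b` in plain Python. It illustrates the general technique only, not TenStream's implementation; the toy matrix, `omega`, and the warm start from a previous solution (which is what would make very few sweeps viable) are all assumptions for illustration.

```python
from typing import List, Sequence


def sor_sweeps(A: Sequence[Sequence[float]], b: Sequence[float],
               x0: Sequence[float], sweeps: int, omega: float = 1.0) -> List[float]:
    """Run a fixed number of SOR sweeps on A x = b, starting from x0.

    omega = 1 is Gauss-Seidel; 1 < omega < 2 over-relaxes. Stopping after
    `sweeps` iterations regardless of convergence makes the solve incomplete.
    """
    x = list(x0)
    n = len(b)
    for _ in range(sweeps):
        for i in range(n):
            sigma = sum(A[i][j] * x[j] for j in range(n) if j != i)
            gs = (b[i] - sigma) / A[i][i]  # Gauss-Seidel value for x[i]
            x[i] = (1.0 - omega) * x[i] + omega * gs
    return x


def residual_norm(A: Sequence[Sequence[float]], b: Sequence[float],
                  x: Sequence[float]) -> float:
    """Max-norm of the residual b - A x."""
    return max(abs(b[i] - sum(A[i][j] * x[j] for j in range(len(x))))
               for i in range(len(b)))


# Diagonally dominant toy system; the exact solution is x = [1, 1, 1].
A = [[4.0, -1.0, 0.0], [-1.0, 4.0, -1.0], [0.0, -1.0, 4.0]]
b = [3.0, 2.0, 3.0]
cold = sor_sweeps(A, b, [0.0, 0.0, 0.0], sweeps=2, omega=1.1)   # from zero
warm = sor_sweeps(A, b, [0.9, 1.1, 0.95], sweeps=2, omega=1.1)  # near previous answer
print(residual_norm(A, b, cold), residual_norm(A, b, warm))
```

With the same two sweeps, the warm-started solve ends with a residual several times smaller than the cold start, which is the intuition behind pairing frequent radiation calls with few iterations.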

---

Coarse-grained results

The following results use the same setup, except that the cloud fields are subsampled by a factor of 4 or 8. Note, however, that the horizontal resolution is kept at 25 m: we simply take every n-th column to reduce the computational burden.
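Taking every n-th column is strided slicing of the horizontal grid; because the grid spacing stays at 25 m, the domain shrinks rather than the resolution coarsening. A minimal sketch, assuming the subsampling is applied in both horizontal directions (the text does not say whether it is one or both) and that a field is stored as a nested list indexed `[x][y]`; the function name `subsample_columns` is hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def subsample_columns(field: Sequence[Sequence[T]], n: int) -> List[List[T]]:
    """Keep every n-th column in both horizontal directions.

    The grid spacing is unchanged (25 m), so each domain edge shrinks by a
    factor of n and the number of columns by n * n.
    """
    return [list(row[::n]) for row in field[::n]]


dx = 25.0  # grid spacing in m, unchanged by subsampling
nx = ny = 8
field = [[10 * i + j for j in range(ny)] for i in range(nx)]
coarse = subsample_columns(field, 4)
print(coarse)  # [[0, 4], [40, 44]]
print(len(coarse) * dx, "m domain edge instead of", nx * dx, "m")
```

Under this assumption, a factor of 4 reduces the column count by 16 and a factor of 8 by 64, which is where the computational savings come from.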